AI Research Feels Like Magic—Until It Leads You Astray

How AI Search Gave Me the Wrong Answer—And Why You Can’t Trust It Blindly

I had a straightforward research task: to determine whether certain companies had a presence on X (formerly Twitter).

So, I turned to Grok, the AI search tool built into X, and my paid version of ChatGPT, which has advanced research capabilities. These tools are marketed as next-generation research assistants capable of analyzing vast amounts of data and returning instant insights.

At first, AI delivered an impressive, comprehensive breakdown of company strategies, market positioning, and even detailed source links I would never have had the time to gather manually.

But then, when I asked whether these companies had active accounts on X, AI confidently returned incorrect results.

“These companies do not have an X presence.”

This seemed odd.

Why would an AI built into X not know whether an account exists on its platform?

So, I did what I should have done from the beginning: a simple manual search.

And there they were. Active accounts, verified, posting content. AI had completely missed them.

At first, I thought this was just an isolated glitch. Then I read the Columbia Journalism Review’s deep dive into AI search engines and realized this was part of a much bigger problem.

The Columbia Journalism Review Study: AI Search is a Citation Disaster

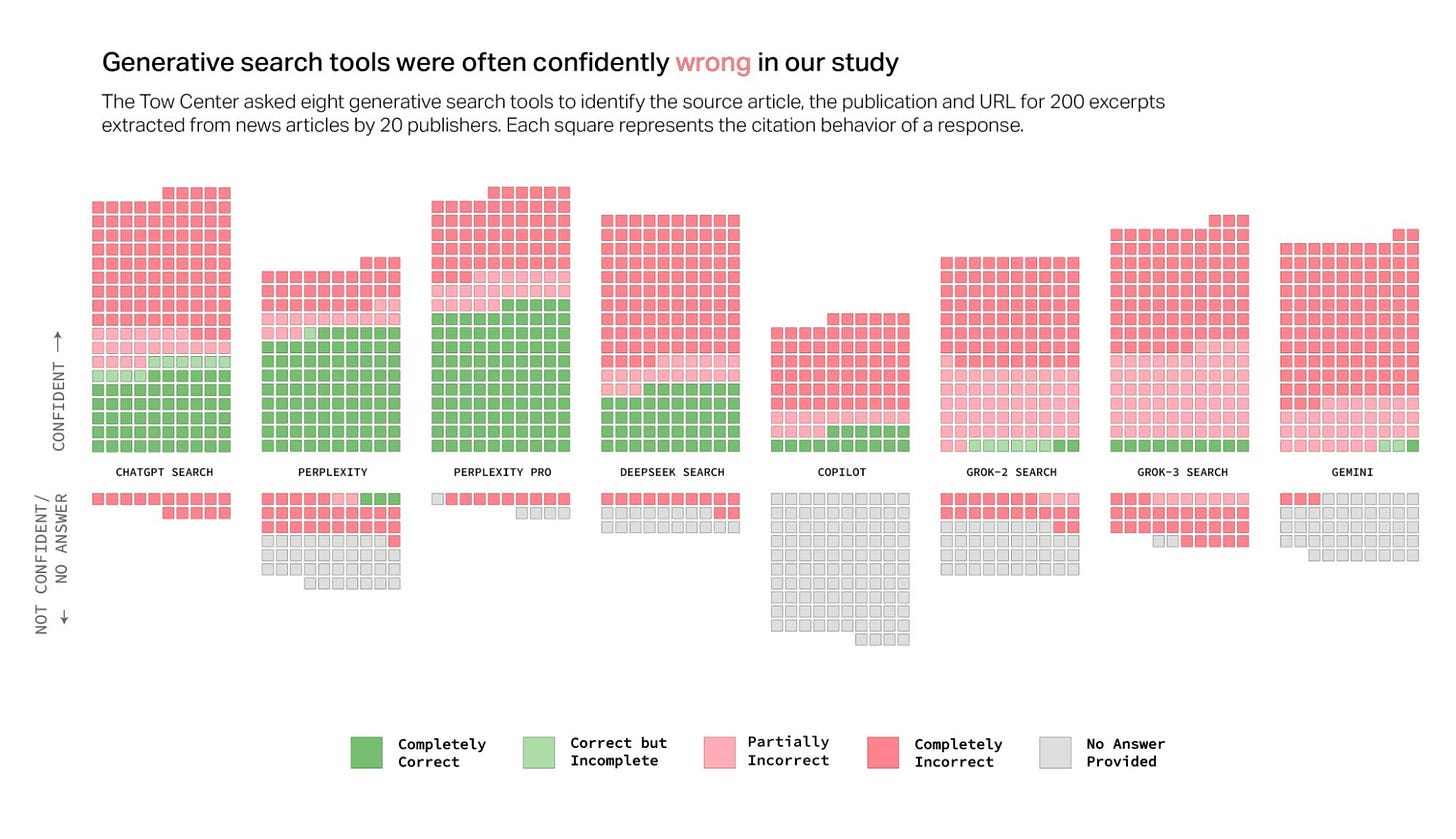

In its investigation into AI search tools, the Columbia Journalism Review (CJR) found that AI search engines:

Frequently misrepresent sources or fail to provide citations altogether.

Fabricate sources when real ones don’t exist.

Struggle to retrieve real-time or dynamic content.

Present incorrect answers with extreme confidence, making errors harder to detect.

The study analyzed eight AI-powered search engines, including tools similar to the ones I used, and found that they all exhibited these problems.

In one case, CJR found that AI search tools would generate fake news citations, referencing articles that didn’t exist. In another instance, AI misattributed real articles, crediting the wrong authors and publications.

Why AI Gets It Wrong—And Why It Matters

AI Isn’t Searching the Web Like You Think It Is

Traditional search engines like Google continuously crawl and index the web, so their results stay relatively current. AI models like ChatGPT and Grok, however, are trained on a snapshot of data and don’t always retrieve live information.

This likely explains why Grok failed to find company accounts on its own platform: it isn’t necessarily running a live search the way a human would.

AI Prioritizes “Confidence” Over Accuracy

The CJR study highlighted that premium AI search tools (yes, the ones people pay for) gave more confidently incorrect answers than their free counterparts.

Why? Premium models tend to give a definitive answer instead of declining when they are unsure. They package wrong answers in a way that makes them more believable.

AI Will Make Up Information If It Doesn’t Have It

One of the most damning findings in the CJR study was AI’s tendency to fabricate information rather than admit it doesn’t know something.

When AI is missing data, it doesn’t stop and say, "Sorry, I don’t have enough information on that." Instead, it fills in the gaps with its best guess.

That’s exactly what happened in my case. Instead of acknowledging, "I can’t verify whether these companies are on X," Grok just said, "They aren’t."

Why This Matters for Marketers and Researchers

If I had blindly trusted AI’s answer, I would have:

Reported inaccurate findings to my team.

Overlooked real competitors who were actively marketing on X.

Based strategy decisions on false assumptions.

And this isn’t just about social media presence. AI search engines are now being used to guide critical business decisions, marketing strategies, and competitive analysis.

If AI can’t even reliably tell me whether a company exists on a social platform, how can we trust it to:

Analyze market trends?

Identify consumer behavior shifts?

Provide accurate competitor intelligence?

How to Avoid AI’s Misinformation Traps

Since AI isn’t perfect, human oversight is essential. Here’s how to protect yourself from AI research errors:

1. Always Cross-Check AI Results with Manual Searches

If AI tells you a company isn’t on X, go search X yourself. If AI summarizes an article, read the actual article before relying on it.

2. Treat AI as a Research Assistant, Not an Authority

AI is great for speed and efficiency, but it should never be your primary research method.

3. Demand Citations—And Verify Them

If AI provides a source, click through and check it. If it doesn’t provide sources, treat the answer as unverified.

4. Prioritize First-Party Data Over AI Outputs

For industry research, use official reports, analytics tools, and direct sources over AI-generated insights.

5. Train Your Team to Recognize AI-Generated Misinformation

If your marketing or research team is using AI, educate them on its limitations. The more critical they are, the better.
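Steps 1 and 3 above can be turned into a simple habit: log what the AI said, what it cited, and what a person actually found. The Python sketch below is one hypothetical way to do that; it is not a tool from the CJR study or from any AI product. The names `AIClaim`, `url_resolves`, and `triage`, the status labels, and the company "ExampleCo" are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional
import urllib.request


@dataclass
class AIClaim:
    """One yes/no question put to an AI tool, plus what a human found."""
    question: str
    ai_answer: bool
    cited_urls: List[str] = field(default_factory=list)
    manual_answer: Optional[bool] = None  # None means nobody has checked yet


def url_resolves(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL responds with an HTTP status below 400.

    This only shows the link is not dead or invented. A person still has
    to read the page to confirm it says what the AI claims. Some sites
    reject HEAD requests, so False means "look closer", not "fake".
    """
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status < 400
    except (OSError, ValueError):
        # OSError covers URLError, HTTPError, and timeouts;
        # ValueError covers strings that are not URLs at all.
        return False


def triage(claim: AIClaim,
           check_url: Callable[[str], bool] = url_resolves) -> str:
    """Label a claim by how much verification it has actually had."""
    if claim.manual_answer is not None:
        # A human check always outranks the AI's answer.
        if claim.manual_answer == claim.ai_answer:
            return "confirmed"
        return "contradicted"
    if not claim.cited_urls:
        return "unverified"
    if not all(check_url(url) for url in claim.cited_urls):
        return "suspect sources"
    return "needs manual check"


# Hypothetical example modeled on the X account search described earlier.
claim = AIClaim(
    question="Is ExampleCo on X?",
    ai_answer=False,      # the AI said the company is not on X
    manual_answer=True,   # a manual search found an active account
)
print(triage(claim))  # contradicted
```

Notice that nothing in this sketch ever marks a claim as "confirmed" on the AI's word alone. A working link only moves a claim to "needs manual check", which is the point of steps 1 and 3: someone still has to look.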

The MAC Takeaway: AI is a Tool, Not a Truth Machine

AI-powered research feels like magic—until it isn’t.

It’s an incredible tool for speed, efficiency, and brainstorming, but it’s not infallible. At the MAC, we believe in truth-seeking, transparency, and accountability, which means recognizing AI for what it is: a powerful assistant, but never a replacement for real research.

So next time AI gives you an answer, remember:

🛑 Pause.

🔍 Check the facts.

✅ Verify before you trust.

Because at the end of the day, AI won’t take responsibility for mistakes—but you will.

AI Search Has a Citation Problem – Read the Full Study

📖 Columbia Journalism Review | Tow Center for Digital Journalism

🔗 Read the full article

🚀 Meet Jay – The Consultant Who Cuts Through the AI Hype

AI is transforming industries, but misinformation, unreliable data, and bad marketing strategies are running rampant. As a consultant, researcher, and speaker, Jay has worked with leading brands, tech companies, and marketing teams to bridge the gap between AI innovation and real-world application.

🎤 Available for Speaking & Consulting

Need a speaker for your next conference, workshop, or executive training session? Jay is available for keynotes, panels, and in-depth consulting.

📩 Book Jay today – Email: jay@yourbrand.coach or visit jaymandel.com to learn more.

💡 Want to future-proof your business against AI misinformation? Let’s talk.

No way, Grok (an Elon Musk offering) is the most unreliable?!?!?! Who would have ever guessed?

I'm pleased to know my AI chatbot of choice (Perplexity) is the most reliable in terms of source accuracy, but even it leaves room for significant improvement.

My assumption is people aren't going to bother checking AI answer sources for the same reason people believe what they see on the internet absent source verification and validation, especially if the AI gives an answer you like. Have to be wary of confirmation bias!